Figure 3 from the article “Artificial intelligence in government: Concepts, standards, and a unified framework” published in Government Information Quarterly.

Selected publications

- Straub, V. J., Morgan, D., Bright, J. & Margetts, H. Artificial intelligence in government: Concepts, standards, and a unified framework. Gov. Inf. Q. 40, 101881 (2023).

- Straub, V. J. et al. A multidomain relational framework to guide institutional AI research and adoption. In Proc. 2023 AAAI/ACM Conf. AI, Ethics, and Society 512–519 (2023).

- Wong, J., Morgan, D., Straub, V. J., Hashem, Y. & Bright, J. Key challenges for the participatory governance of AI in public administration. In Handbook on Governance and Data Science (eds. Reddick, C. G., Rodriguez-Müller, A. P., Yates, D. J. & Anthony, D. L.) 179–197 (Edward Elgar Publishing, 2025).

Artificial intelligence in government: Concepts, standards, and a unified framework

This article was originally published in Government Information Quarterly in October 2023.

Highlights

- Provides an integrative literature review of the multidisciplinary field of AI in government

- Integrative literature review and concept co-occurrence analysis are used to study 69 different concepts used in technical and social science disciplines to study AI systems

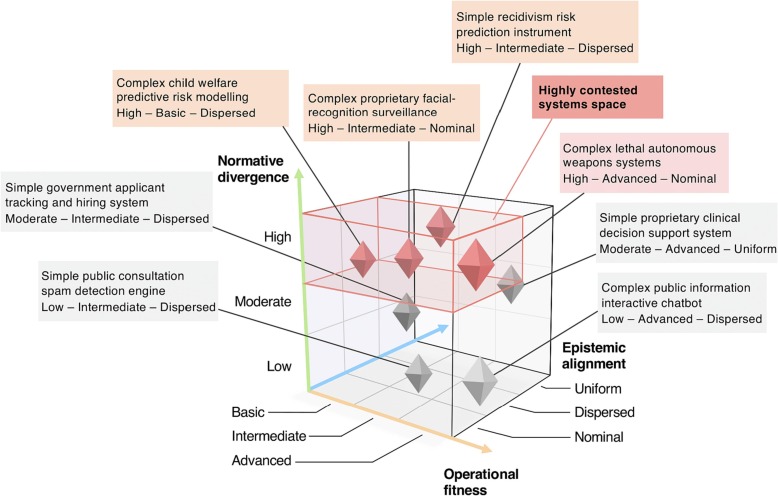

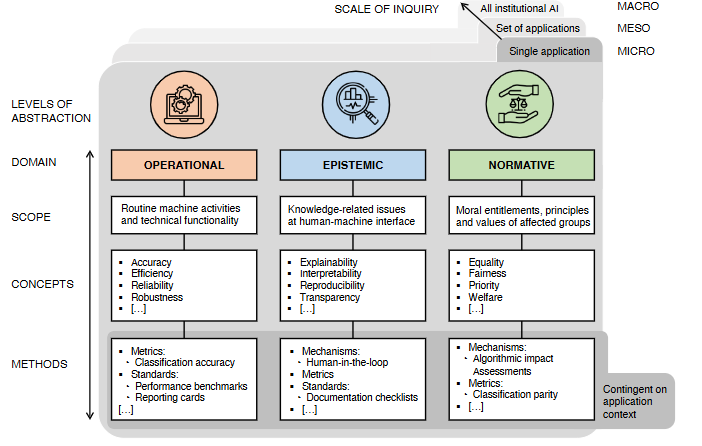

- Three new concepts, operational fitness, epistemic alignment, and normative divergence, are proposed to study AI systems

- A novel conceptual typology framework is developed using the proposed concepts to classify existing AI systems in government

- Proposed concepts are connected to emerging technical AI measurement standards to stimulate policy debate

Abstract

Recent advances in artificial intelligence (AI), especially in generative language modelling, hold the promise of transforming government. Given the advanced capabilities of new AI systems, it is critical that these are embedded using standard operational procedures, clear epistemic criteria, and behave in alignment with the normative expectations of society. Scholars in multiple domains have subsequently begun to conceptualize the different forms that AI applications may take, highlighting both their potential benefits and pitfalls. However, the literature remains fragmented, with researchers in social science disciplines like public administration and political science, and the fast-moving fields of AI, ML, and robotics, all developing concepts in relative isolation. Although there are calls to formalize the emerging study of AI in government, a balanced account that captures the full depth of theoretical perspectives needed to understand the consequences of embedding AI into a public sector context is lacking. Here, we unify efforts across social and technical disciplines by first conducting an integrative literature review to identify and cluster 69 key terms that frequently co-occur in the multidisciplinary study of AI. We then build on the results of this bibliometric analysis to propose three new multifaceted concepts for understanding and analysing AI-based systems for government (AI-GOV) in a more unified way: (1) operational fitness, (2) epistemic alignment, and (3) normative divergence. Finally, we put these concepts to work by using them as dimensions in a conceptual typology of AI-GOV and connecting each with emerging AI technical measurement standards to encourage operationalization, foster cross-disciplinary dialogue, and stimulate debate among those aiming to rethink government with AI.

Cite: Straub, V. J. et al. A multidomain relational framework to guide institutional AI research and adoption. In Proc. 2023 AAAI/ACM Conf. AI, Ethics, and Society 512–519 (2023). https://doi.org/10.1145/3600211.3604718.

Key challenges for the participatory governance of AI in public administration

This article was originally published in the Handbook on Governance and Data Science on 6 February 2025.

Abstract

As artificial intelligence (AI) becomes increasingly embedded in government operations, retaining democratic control over these technologies is becoming ever more crucial for mitigating potential biases or lack of transparency. However, while much has been written about the need to involve citizens in AI deployment in public administration, little is known about how democratic control of these technologies works in practice. This chapter proposes to address this gap through participatory governance, a subset of governance theory that emphasises democratic engagement, in particular through deliberative practices. We begin by introducing the opportunities and challenges the AI use in government poses. Next, we outline the dimensions of participatory governance and introduce an exploratory framework which can be adopted in the AI implementation process. Finally, we explore how these considerations can be applied to AI governance in public bureaucracies. We conclude by outlining future directions in the study of AI systems governance in government.

Cite: Wong, J., Morgan, D., Straub, V. J., Hashem, Y. & Bright, J. Key challenges for the participatory governance of AI in public administration. In Handbook on Governance and Data Science (eds. Reddick, C. G., Rodriguez-Müller, A. P., Yates, D. J. & Anthony, D. L.) 179–197 (Edward Elgar Publishing, 2025). https://doi.org/10.4337/9781035301348.00017.